Coverage Results

This section discusses how much of the known text similarity the systems found. Because the systems have different strengths and weaknesses, the results cannot be boiled down to a single number that could easily be compared. Instead, the focus is on several aspects that are discussed in detail. All tables in this section show averages of the evaluation scores, so the maximum possible score is 5 and the minimum possible score is 0. Boldface marks the maximum value achieved in each row, indicating which system performed best for that specific criterion. All values are shaded from red (worst) to dark green (best), with yellow being intermediate.
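The way the tables are read can be illustrated with a minimal sketch. It assumes each cell is the arithmetic mean of per-document scores on the 0 to 5 scale and that the boldface value is the row maximum (ties are all marked). The system names and scores below are hypothetical placeholders, not data from the evaluation.

```python
from statistics import mean

# Hypothetical raw scores (0 to 5) per table row and system.
# These values are illustrative only and are NOT taken from the study.
raw_scores = {
    "English": {"System A": [5, 4, 3], "System B": [2, 3, 1]},
    "Czech": {"System A": [1, 2, 0], "System B": [3, 2, 4]},
}


def summarize(raw):
    """Return {row: (average per system, list of best systems in that row)}."""
    table = {}
    for row, by_system in raw.items():
        # Average the per-document scores of each system for this row.
        averages = {name: round(mean(vals), 2) for name, vals in by_system.items()}
        # The row maximum corresponds to the boldface cell; keep all ties.
        top = max(averages.values())
        best = [name for name, avg in averages.items() if avg == top]
        table[row] = (averages, best)
    return table


summary = summarize(raw_scores)
for row, (averages, best) in summary.items():
    print(row, averages, "best:", best)
```

Because the best system is determined row by row, different systems can lead in different rows, which is why no single overall number is reported.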

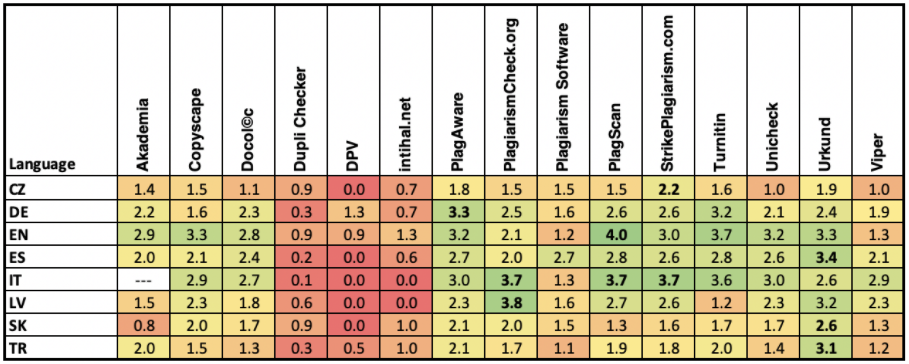

Language Comparison

Table 1 shows the aggregated results of the language comparisons based on the language sets. Most of the systems performed better for English, Italian, Spanish, and German, whereas the results for Latvian, Slovak, Czech, and Turkish are generally poorer. StrikePlagiarism.com was the only system that found a Czech student thesis from 2010 that is publicly available on a university web page. The Slovak paper in an open-access journal was not found by any of the systems. Urkund was the only system that found an open-access book in Turkish. It is worth noting that a Turkish system, intihal.net, did not find this Turkish source.

Table 1. Coverage results according to language

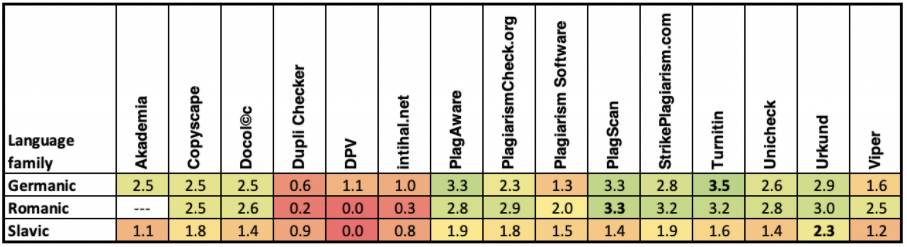

Besides the individual languages, we also evaluated language groups according to a standard linguistic classification, that is, Germanic (English and German), Romance (Italian and Spanish), and Slavic (Czech and Slovak). Table 2 shows the results for these language subgroups. The systems achieved better, and mutually comparable, results for the Germanic and Romance languages. The results for the Slavic languages are noticeably worse.
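The grouping can be sketched as follows. This assumes that a subgroup score is the unweighted mean of the per-language averages of its two member languages; the source does not state the exact aggregation, so this is only one plausible reading. The per-language values are hypothetical and serve only to show the computation.

```python
from statistics import mean

# Language subgroups as listed in the text.
GROUPS = {
    "Germanic": ["English", "German"],
    "Romance": ["Italian", "Spanish"],
    "Slavic": ["Czech", "Slovak"],
}

# Hypothetical per-language averages (0 to 5) for a single system.
# Illustrative values only, NOT results from the study.
per_language = {
    "English": 4.0,
    "German": 3.0,
    "Italian": 3.5,
    "Spanish": 3.5,
    "Czech": 1.0,
    "Slovak": 2.0,
}


def group_averages(scores, groups=GROUPS):
    """Average the per-language scores within each subgroup (assumed unweighted)."""
    return {
        group: mean(scores[lang] for lang in langs)
        for group, langs in groups.items()
    }


subgroup_scores = group_averages(per_language)
print(subgroup_scores)
```

Latvian and Turkish are left out because the text does not assign them to any of the three subgroups.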

Table 2. Coverage results according to the language subgroups

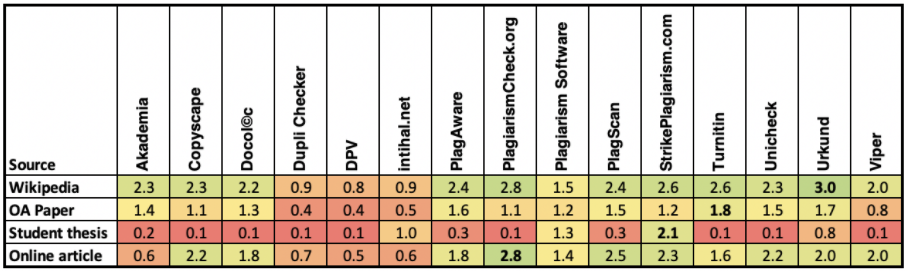

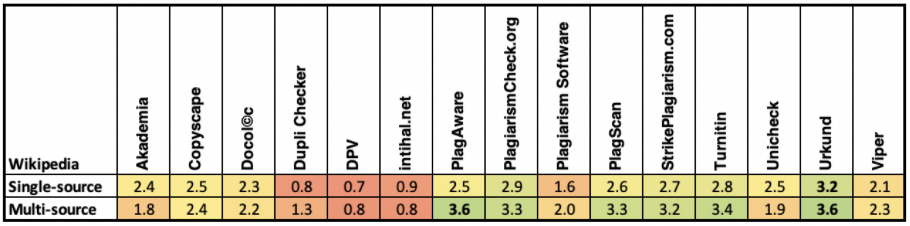

Types of Plagiarism Sources

This subsection discusses the differences between various types of sources, with the results given in Table 3. The testing set contained Wikipedia extracts, open-access papers, student theses, and online documents such as blog posts. The systems generally yielded the best results for Wikipedia sources. The scores vary between the systems because of differences in their ability to detect paraphrased Wikipedia articles. Urkund scored the best for Wikipedia, Turnitin found the most open-access papers, StrikePlagiarism.com scored the best in the detection of student theses, and PlagiarismCheck.org gave the best result for online articles.

Table 3. Coverage results according to the type of source

Since Wikipedia is assumed to be an important source for student papers, the Wikipedia results were examined in more detail. Table 4 summarizes the results from 3 x 8 single-source documents (one article per language) and the Wikipedia scores from multi-source documents in which one-fifth of the text was taken from the current version of Wikipedia. In general, most of the systems are able to find similarities to text that has been copied and pasted from Wikipedia.

Table 4. Coverage results for Wikipedia sources only

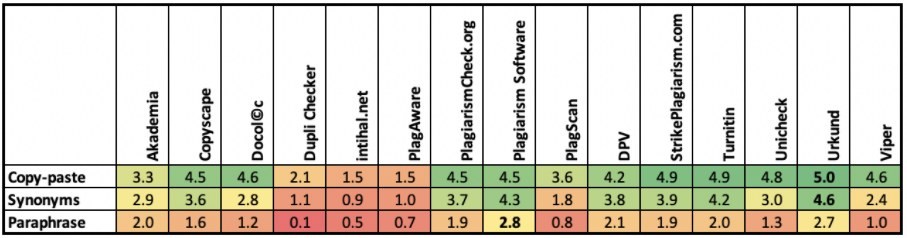

Plagiarism Methods

The same aggregation that was done for Wikipedia in Table 4 was also done over all 16 single-source and eight multi-source documents. In addition to copy & paste, synonym replacement, and manual paraphrase, translation plagiarism was also examined.

Translations were done from English to all languages, as well as from Slovak to Czech, from Czech to Slovak and from Russian to Latvian. The results are shown in Table 5, which confirms that software performs worse on synonym replacement and manual paraphrase plagiarism.

Table 5. Coverage results by plagiarism method

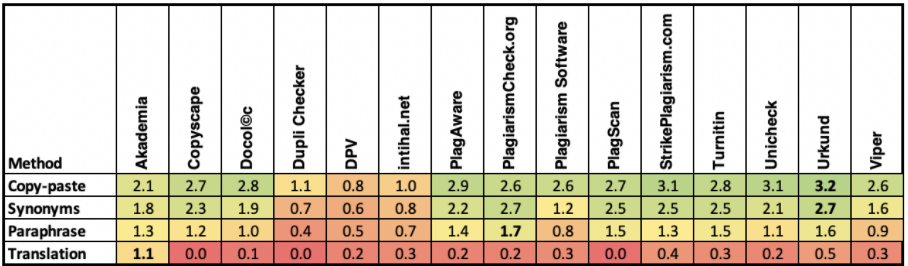

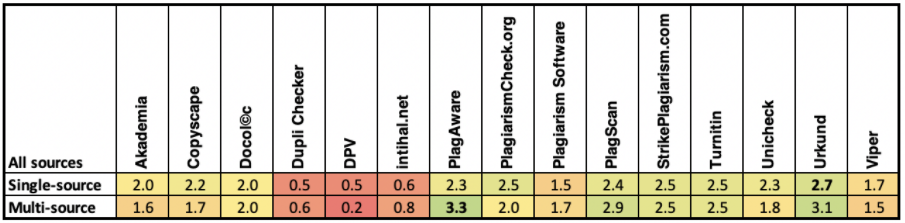

Single-Source vs. Multi-Source Documents

One scenario considered in the test was a text compiled from short passages taken from multiple sources, as opposed to a text taken from a single source. Surprisingly, some systems performed differently in these two scenarios (see Table 6). To remove a bias caused by different types of sources, the Wikipedia-only portions were also examined in isolation (see Table 7). The results are consistent in both cases.

Table 6. Coverage results for single-source and multi-source documents

Table 7. Coverage results for Wikipedia in single-source and multi-source documents

Usability Results

The usability of the systems was evaluated using 23 objective criteria, which were divided into three groups: criteria related to the system workflow process, the presentation of the results, and additional aspects. The points were assigned based on the researchers' findings during a specific period of time.

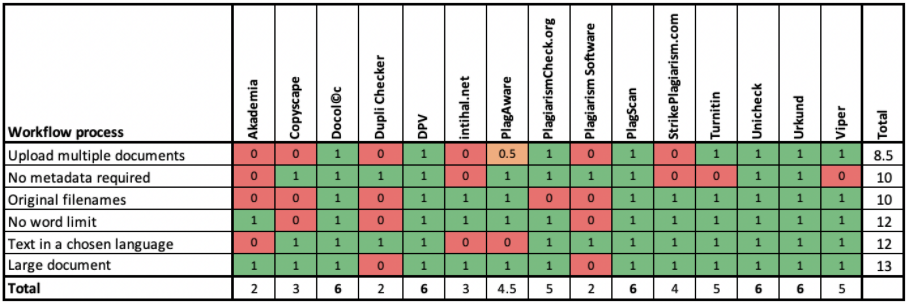

Workflow Process Usability

The results are summarized in Table 8. With respect to the workflow process, five systems were assigned the highest score in this category, and only five systems scored 3 or less. The most widely supported features are the processing of large documents (13 systems), as well as displaying text in the chosen language and having no word limits (12 systems). Uploading multiple documents is a less widely supported feature, which is unfortunate, as it is very important for educational institutions to be able to test several documents at the same time.

Table 8. Usability evaluation: Workflow process

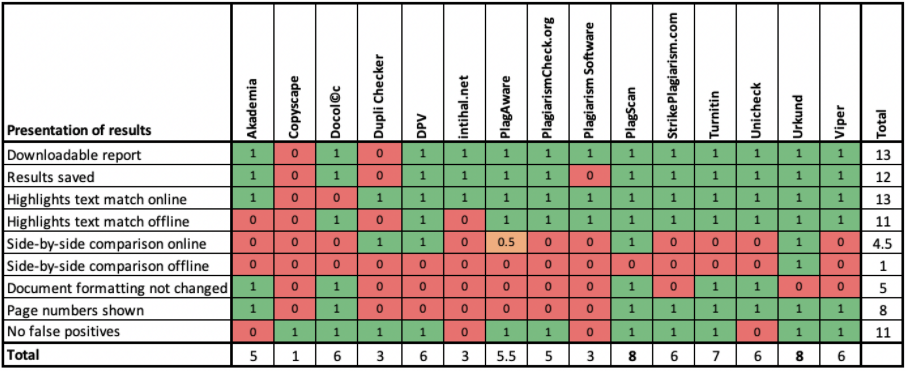

Result Presentation Usability

None of the systems achieved the highest score in the usability group related to the presentation of results. Two systems (PlagScan and Urkund) support almost all features, but six systems support half of the features or fewer. The most widely supported features are the possibility to download result reports and the highlighting of matched passages in the online report. Less widely supported features are a side-by-side presentation of evidence in the downloaded report and in the online report, as well as keeping document formatting.

Table 9. Usability evaluation: Presentation of results

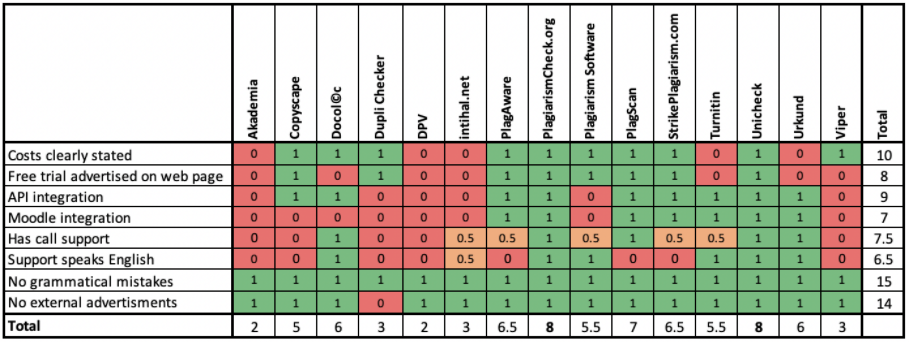

Other Usability Aspects

As shown in Table 10, only PlagiarismCheck.org and Unicheck fulfilled all criteria. Five systems supported fewer than half of the defined features. The most commonly met criteria were the absence of grammatical mistakes and the absence of external advertisements. Problematic areas are system costs that are not clearly stated, unclear possibilities for integration with Moodle, and the lack of call support in English.

Table 10. Usability evaluation: Other usability aspects